Research, Reproducibility, Data

Last week, Thomas Herndon, an economics grad student, published a paper that refuted a renowned economics paper authored by two Harvard professors on three counts:

- some data was excluded from the analysis without stating the reasons;

- during processing of the data, a debatable method for weighting the data was used;

- there was a “coding error”: the authors had used MS Excel for their analysis and apparently selected the wrong range of cells for one calculation.
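To get a feeling for how much the last two points can matter, here is a minimal sketch in Python. The growth numbers and country names are invented purely for illustration and are not taken from either paper; the sketch also does not claim to reproduce which weighting the original authors used. It only shows that two defensible ways of averaging, and a range that stops one row short, can give noticeably different answers on the same data.

```python
# Toy growth rates (percent) per country and year.
# All numbers are invented for illustration; they are NOT from either paper.
growth = {
    "A": [2.0, 3.0, 1.0, 2.0],  # four years of observations
    "B": [-8.0],                # a single, very bad year
    "C": [4.0, 2.0],            # two years of observations
}


def mean(values):
    """Plain arithmetic mean of a non-empty sequence."""
    return sum(values) / len(values)


# Option 1: every country counts equally, however many years it contributes.
country_means = [mean(years) for years in growth.values()]
equal_country = mean(country_means)

# Option 2: every country-year counts equally (pool all observations).
pooled = mean([g for years in growth.values() for g in years])

# A spreadsheet-style slip: the averaged range stops one row too early,
# so the last country silently drops out of the calculation.
truncated = mean(country_means[:-1])

print(f"equal weight per country:      {equal_country:.2f}")
print(f"equal weight per country-year: {pooled:.2f}")
print(f"range one row too short:       {truncated:.2f}")
```

With these made-up numbers, the single bad year of country B dominates the per-country average but is diluted in the pooled one, and dropping one row changes the result yet again.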

As far as I can tell, Mike Konczal was the first to write about the freshly published paper on April 16th.

First, Reinhart and Rogoff selectively exclude years of high debt and average growth. Second, they use a debatable method to weight the countries. Third, there also appears to be a coding error that excludes high-debt and average-growth countries. All three bias in favor of their result, and without them you don’t get their controversial result.

On April 17th, Arindrajit Dube, assistant professor of economics at the University of Massachusetts, Amherst (the same school as Thomas Herndon), weighed in. He presents a concise analysis of the reasoning behind Herndon’s paper. One key point of his is that the strength of the dependence varies across the range of the data. In this case, the strength of the relationship [between growth and debt-to-GDP] is actually much stronger at low ratios of debt-to-GDP. From there he goes on to wonder about the causes of this changing relationship.

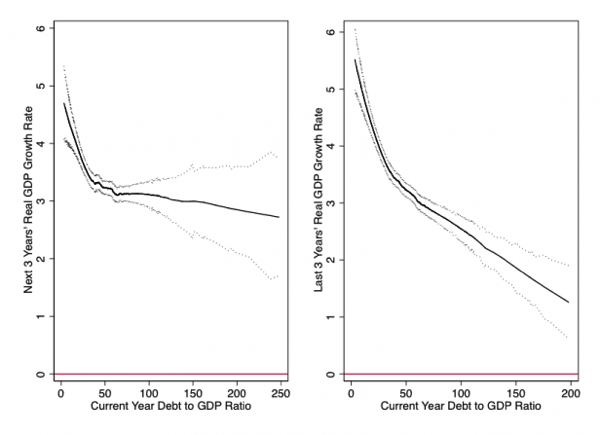

Here is a simple question: does a high debt-to-GDP ratio better predict future growth rates, or past ones? If the former is true, it would be consistent with the argument that higher debt levels cause growth to fall. On the other hand, if higher debt “predicts” past growth, that is a signature of reverse causality.

Future and Past Growth Rates and Current Debt-to-GDP Ratio. Figure’s source.
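The future-versus-past question can be illustrated with a small Python sketch. This is not Dube's method or data: the series below are invented, and the debt series is deliberately constructed so that debt reacts to the previous year's growth (reverse causality by construction). The helper names `pearson` and `shifted_corr` are my own. The point is only to show what the "signature" looks like when you compare current debt with earlier and with later growth.

```python
from math import sqrt

# Invented yearly growth rates (percent); NOT real data.
growth = [3.0, -1.0, 2.0, 0.0, 4.0, -2.0, 1.0, 1.0]

# Assumption for this toy example: debt-to-GDP rises after a weak year,
# i.e. debt[t] depends on growth[t-1]. The first value is arbitrary.
debt = [60.0] + [60.0 - 5.0 * g for g in growth[:-1]]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def shifted_corr(debt, growth, shift):
    """Correlate debt[t] with growth[t + shift] over all valid years t."""
    pairs = [
        (debt[t], growth[t + shift])
        for t in range(len(debt))
        if 0 <= t + shift < len(growth)
    ]
    xs, ys = zip(*pairs)
    return pearson(xs, ys)


past = shifted_corr(debt, growth, -1)    # current debt vs. last year's growth
future = shifted_corr(debt, growth, +1)  # current debt vs. next year's growth

print(f"debt vs. past growth:   {past:+.2f}")
print(f"debt vs. future growth: {future:+.2f}")
```

Because of how the toy debt series was built, current debt lines up almost perfectly with past growth and much less with future growth. In real data the picture is of course noisier, and a correlation alone does not settle the direction of causality.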

Looking at the data is one thing, but it should always go hand in hand with examining the causal relationships. A lot of people suggest that making data and analysis methods publicly available would prevent such errors. I agree to some extent. It is nice to see a re-analysis performed in Python online. However, why did the authors not see these causal relationships? Did they not have enough time for a rigorous analysis? And would a rigorous analysis not be necessary for research that forms the basis for (current) political decisions?

Josef Perktold frames it in slightly different words (and also links to a post by Fernando Perez that examines the role of IPython and literate programming in reproducibility):

[…] it’s just the usual (mis)use of economics research results. Politicians like the numbers that give them ammunition for their position

and

“Believable” research: If your results sound too good or too interesting to be true, maybe they are not, and you better check your calculations. Although mistakes are not uncommon, the business as usual part is that the results are often very sensitive to assumptions, and it takes time to figure out what results are robust. I have seen enough economic debates where there never was a clear answer that convinced more than half of all economists. A long time ago, when the Asian Tigers where still tigers, one question was: Did they grow because of or in spite of government intervention?

Stephen Colbert, of course, has his own thoughts, and has invited Thomas Herndon to chat with him:


reinhartrogoff

planetwater

25 Apr 13 at 11:05 am